Compiled by: Kyle

Review: Lemon

Source: Content Guild - News

Originally published on: PermaDAO

Original link: https://permadao.notion.site/AO-AO-Core-HyperBeam-1a527607c4398036bbfae5c04bdc0440?pvs=4

Hello everyone, and thank you for joining us today. We are very excited because we can now start onboarding the first batch of node operators. Today I will walk you through what AO-Core is (the basic primitive underlying AO), how to run it on your own devices, and our roadmap and current progress.

About AO

The first thing to understand is that AO is a hyper-parallel computer. Its execution environment is very different from that of traditional smart contracts. Running a node in this new environment is therefore also different: it is not much like running an Ethereum validator or a node on any other blockchain.

AO's node operators can make many decisions, and the users of the system choose which devices should perform computing tasks by forming a market with the node operators. To make this clear, we will first understand the functions of AO-Core from a technical perspective.

What is AO-Core from a technical perspective?

To properly understand AO-Core, we need to get back to the essence of blockchain. It may help to set aside your current understanding of blockchain for a while, which makes it easier to see how this new system works. Then we will gradually explore what a decentralized network looks like in practice.

If we look at it from high up, say 30,000 or 100,000 feet, what is the Internet?

In simple terms, the Internet is a distributed machine consisting of many participants running in parallel. And every connection between these machines requires trust.

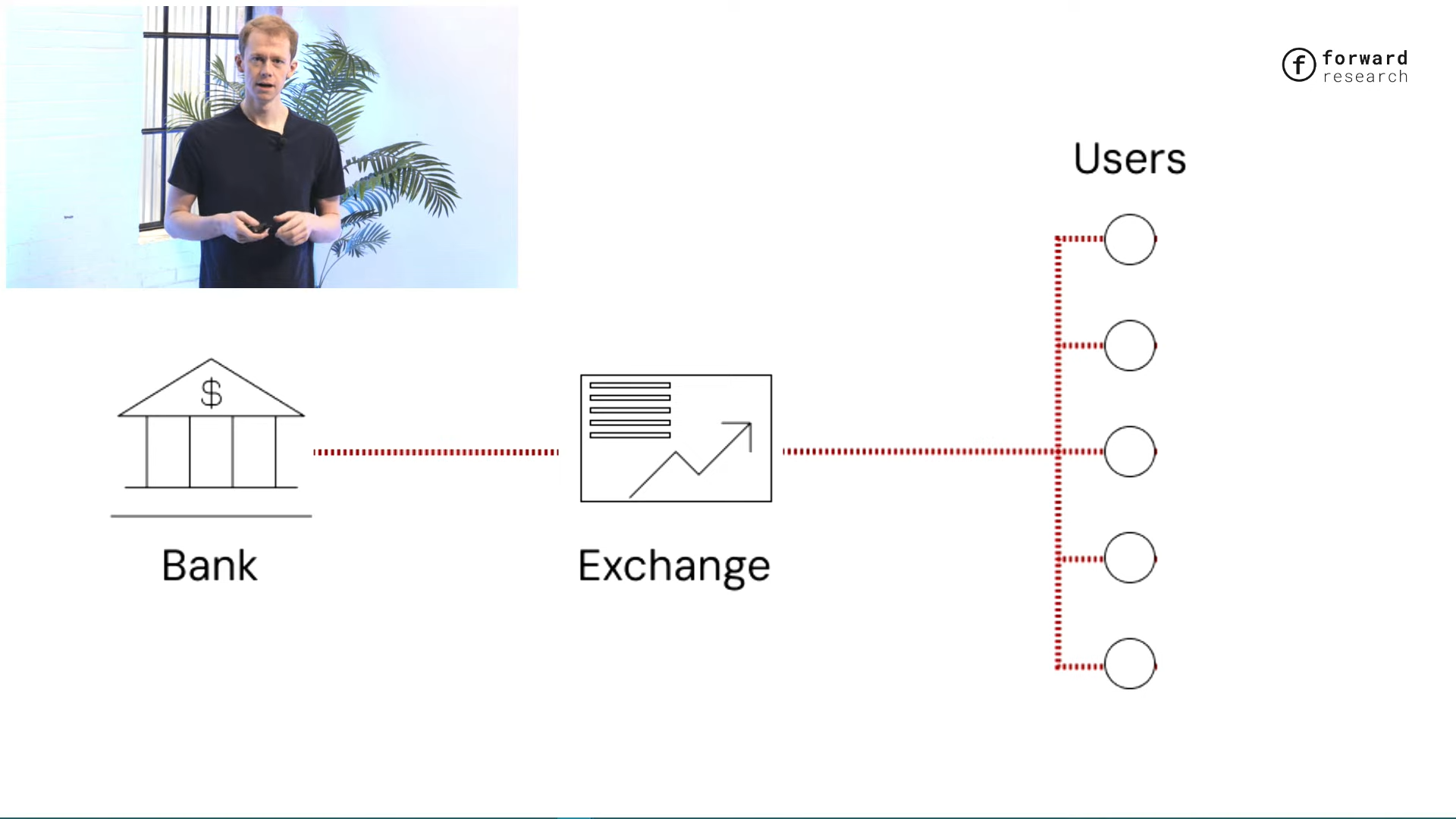

We are used to using encryption in our internet connections. For example, when you visit a bank or an exchange, the different parties interact over encrypted channels so that no one can eavesdrop. But these state transitions — such as the bank notifying the exchange that someone deposited funds, and the exchange executing the trade and notifying the user of the outcome — still rely on trust.

Every interaction in a distributed network is built on trust, and each participant is assumed to accurately report the results of state transitions. Each service runs independently and maintains its own state, but there is no record of how these states were reached. This leads to a fundamental problem: you cannot interact directly with untrusted services. This is not just a theoretical problem, but a very expensive and common situation in reality.

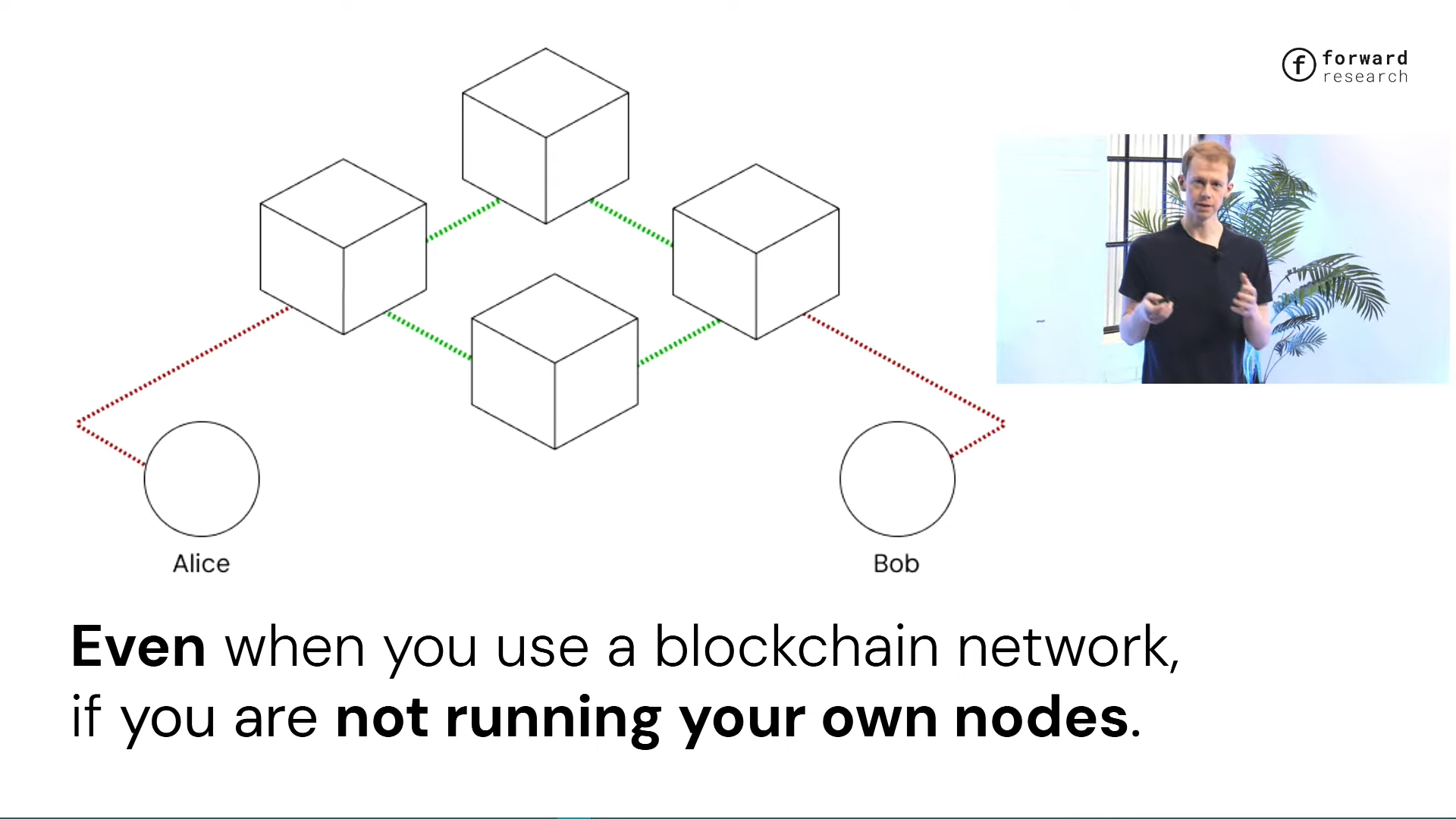

Even when using a blockchain network, you still have to trust the RPC provider or gateway provider to tell you exactly what is happening. Every connection on the Internet is unverified by default. You can overlay a blockchain network, but if you still interact with old protocols and services, you can't be completely sure what is happening.

There are indeed many examples where centralized services at the edge of blockchain networks, such as RPC providers, have caused problems for users. For example, the interface of a decentralized finance (DeFi) network was hacked: users thought they were interacting directly with the network, but in fact they were not. This is just one of many examples. In traditional blockchain networks, although the core is trustless, the channels that connect to the blockchain still require trust, just like the rest of the Internet. It is as if we are building small islands of trustless computing in a vast sea of trusted computing and communication.

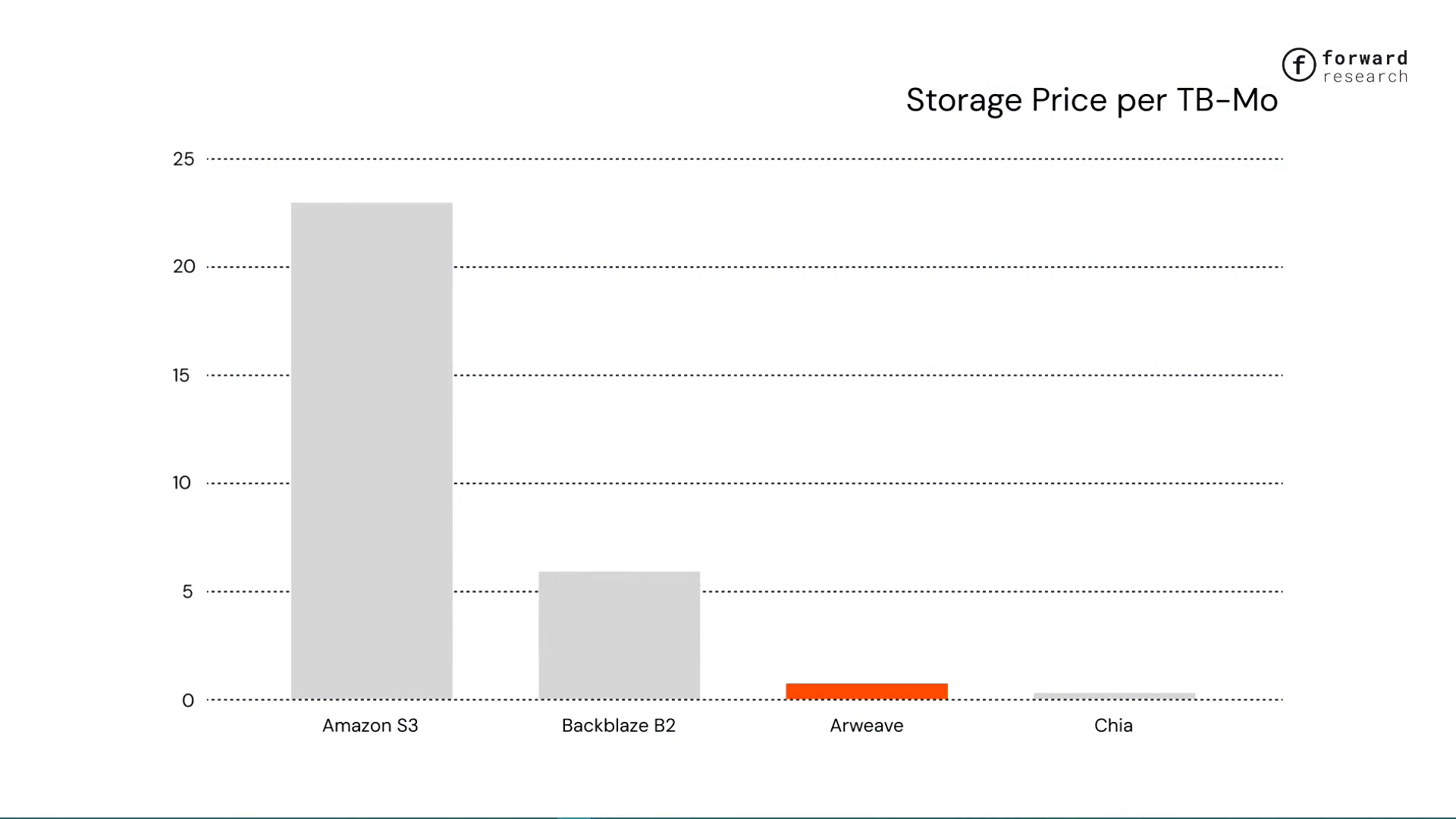

The cost of trust cannot be ignored. This is true from many perspectives, not just the hardware supply. For example, the Arweave network charges about 78 cents per terabyte per month, while the cheapest centralized provider Backblaze charges $6 and AWS charges as much as $23. Why can Arweave miners provide storage space at such a low cost, but Amazon and Backblaze cannot?

The answer is that Arweave miners don’t have direct access to users, and users don’t trust them the way they trust Amazon. Amazon has built trust through its brand and reputation. So when businesses need to buy cloud storage, they choose Amazon over Backblaze, even if the latter is only a quarter of the price of the former.

Decentralized physical infrastructure networks like Arweave allow users to connect to remote providers with minimal trust. If you trust the Arweave network - it's completely transparent, you can audit it yourself, understand its mathematical principles, and know that it ensures data storage through a trustless verification mechanism - then you can use it. You can deploy files, data, applications, and even network services to it. There are currently 15 billion messages on Arweave, and people have done a lot with it. As long as you trust the network itself, you don't need to trust the underlying storage providers. Although these providers have storage resources, they cannot sell them directly to corporate customers because corporate customers don't trust them yet. Therefore, in these systems, the cost of trust is very high, and it's not just reflected in the hardware.

The same comparison applies to traditional financial services. For example, people choose to buy ETFs from large institutions such as Fidelity instead of small ETF providers because they trust these large institutions. If you look at the trading volume of these services, you can see that users pay a huge cost for trust in what is essentially an algorithmic service, one that should not need to rely on trust at all.

AO's approach is to mimic the architecture of the Internet and create a computing primitive that runs in parallel across the network. It uses the same "Actor Model" as the Internet: multiple services interact asynchronously, like banks and exchanges that communicate with each other through messages, and only when needed. This is completely different from traditional blockchain networks, where every transaction is a transaction for the entire network. For example, on Ethereum, if someone trades on Uniswap, the entire network stops to verify that the state transition is correct. This does provide strong verification, but it is extremely inefficient and will never reach the scale of the decentralized supercomputer we hope to achieve with AO.

AO also provides a flexible, modular mechanism to minimize the need for trust between the parties in the system. AO-Core is essentially a simple primitive that expresses "this is a computation, this is the output" and ties it to an attestation from a party, so that errors can be proven: if something goes wrong, you can see it happen. It also lets you combine these attestations. You can have as many parties verify the correctness of an interaction as you want, no more, no less. This is the core of AO-Core. Everything built on top of it is just an application of this system, with modular services composed from the same basic primitives.

Simply put, AO-Core integrates the concept of blockchain into the HTTP layer. We refine the concept of blockchain to its most basic elements and express it in a language that is widely understood and supported.

We try to integrate blockchain primitives into existing infrastructure, rather than creating a whole new world for everyone to adapt to. This makes it easier to adopt. All this is thanks to a new Internet standard that was approved last year.

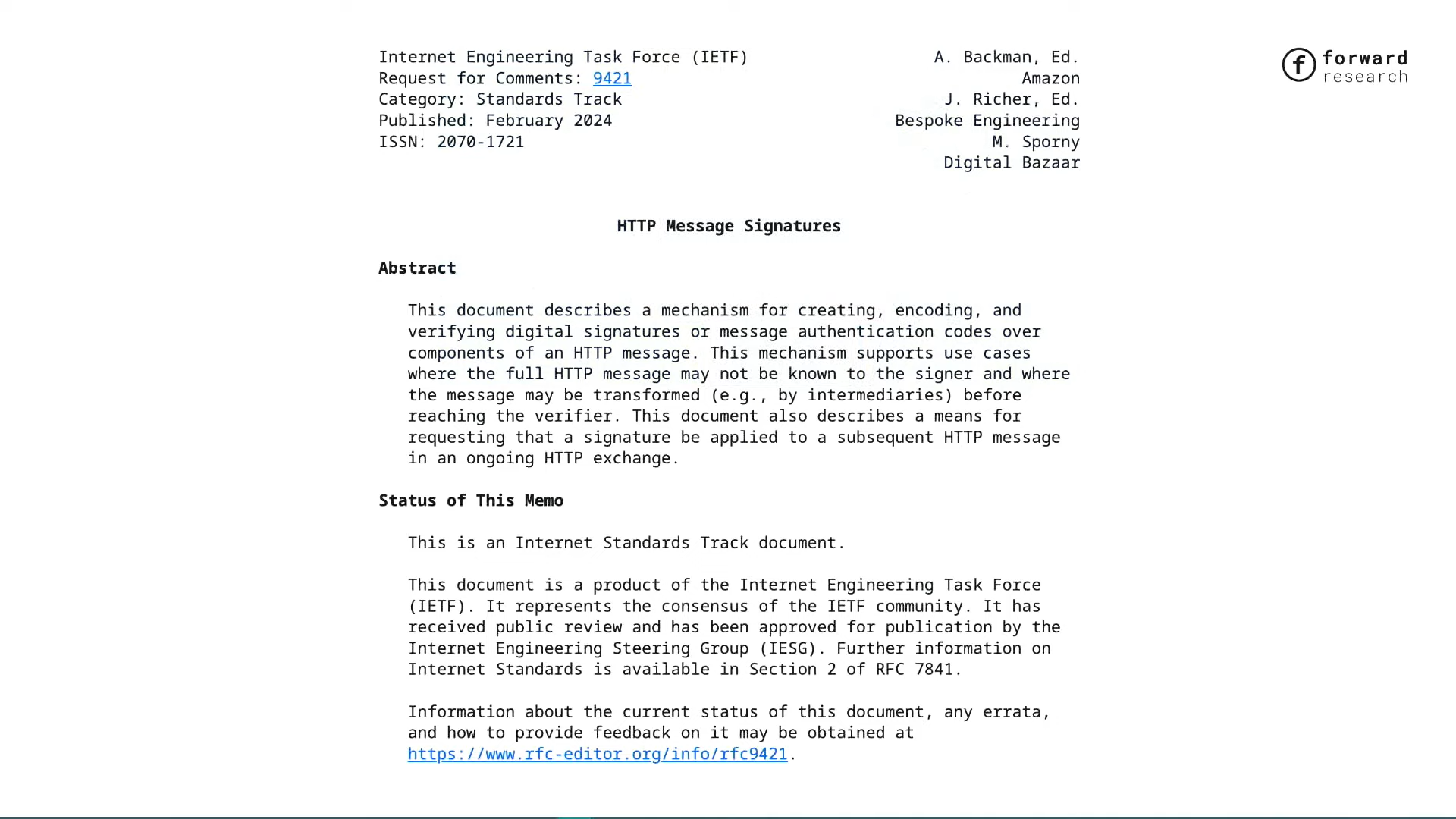

HTTP Message Signatures

Interestingly, this standard almost coincides with the launch of the AO testnet, and it is called "HTTP Message Signatures". As the name suggests, it allows us to embed signatures in HTTP requests and responses. We were very excited when we saw this standard because Arweave's data item format is somewhat similar to HTTP headers and bodies. This allows us to represent data on Arweave directly as HTTP requests and responses, without the need for intermediate format conversions, and to immediately take advantage of all tools that support HTTP message signatures - which are supported by default in almost every programming language.

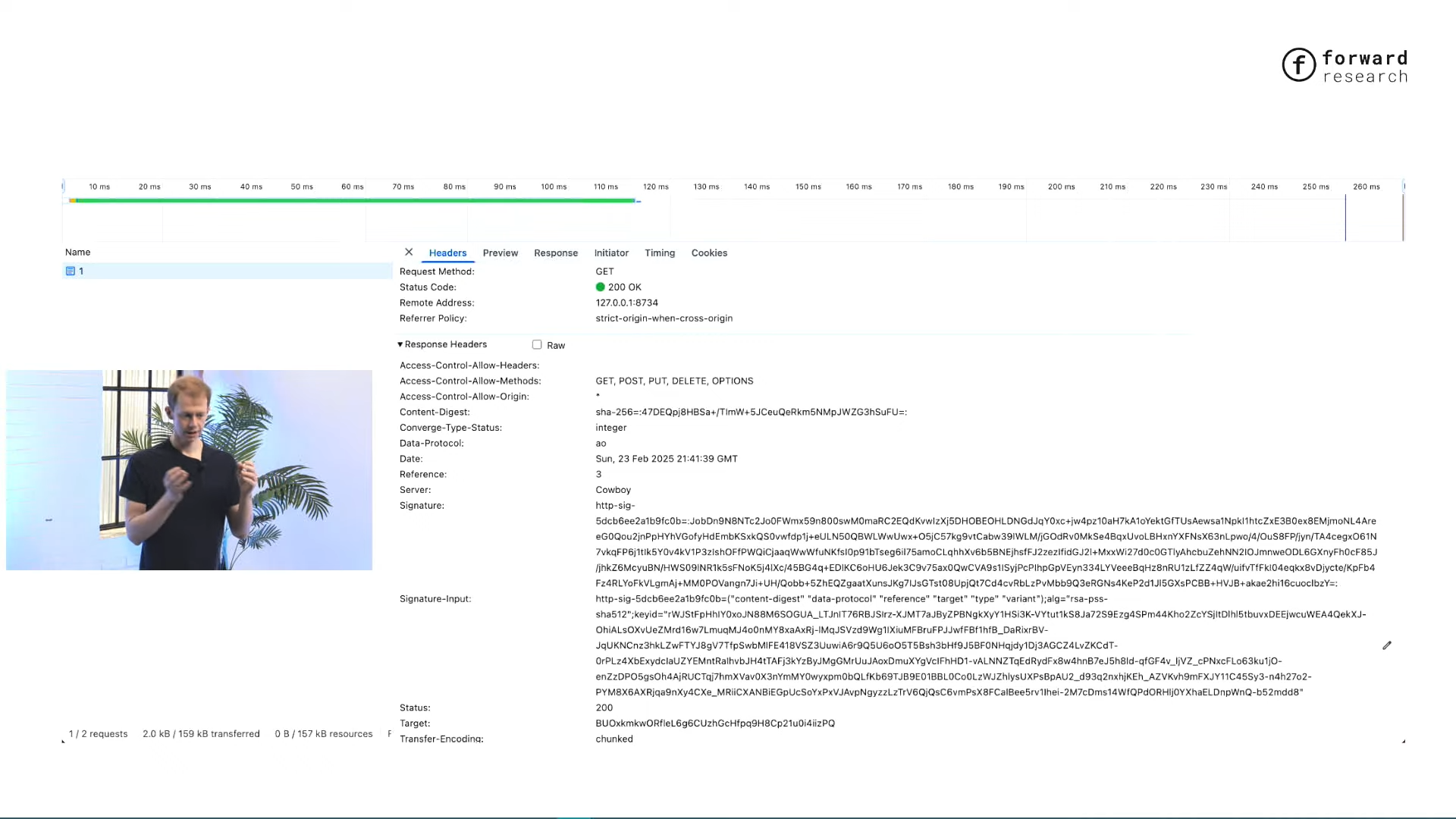

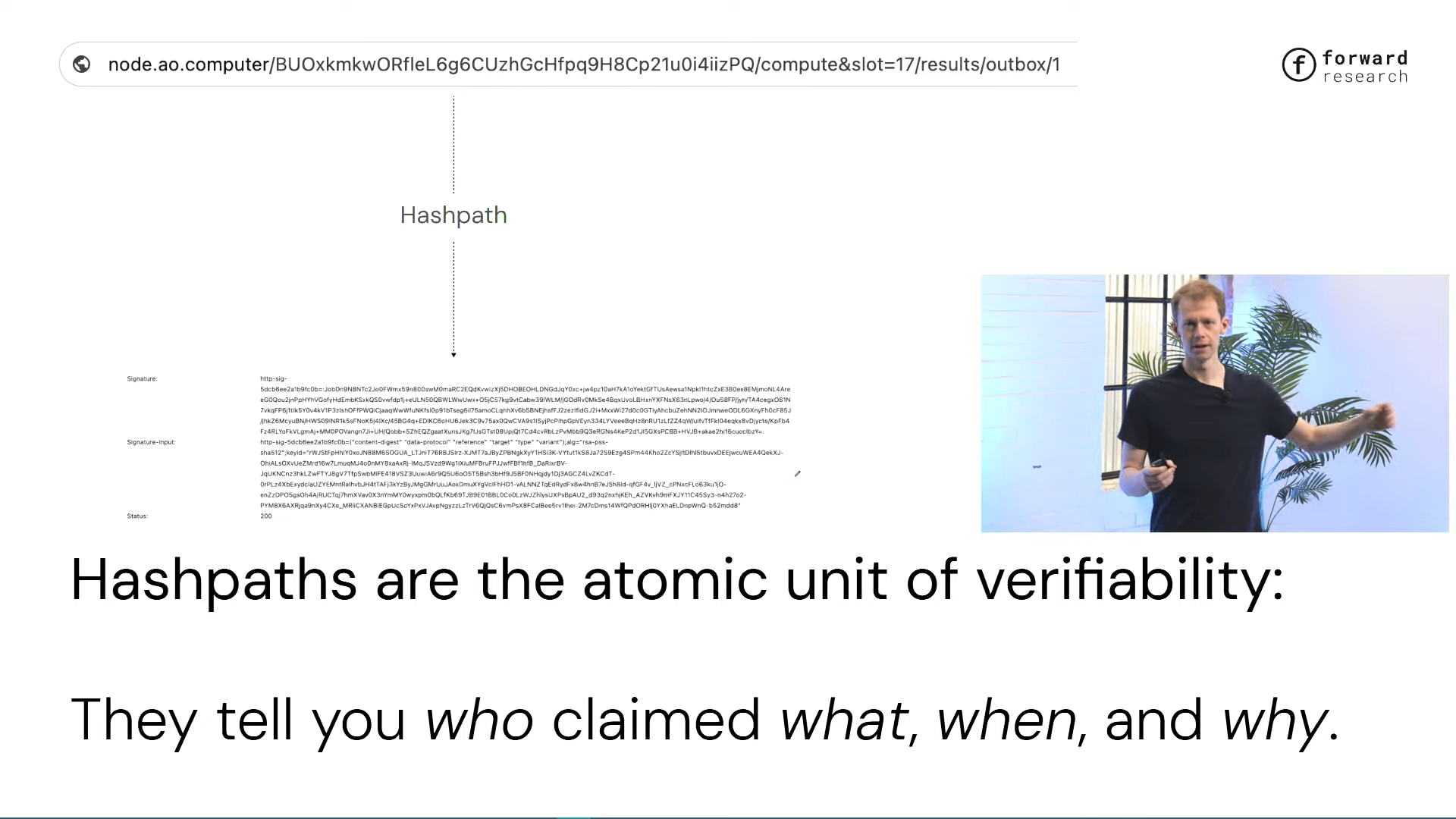

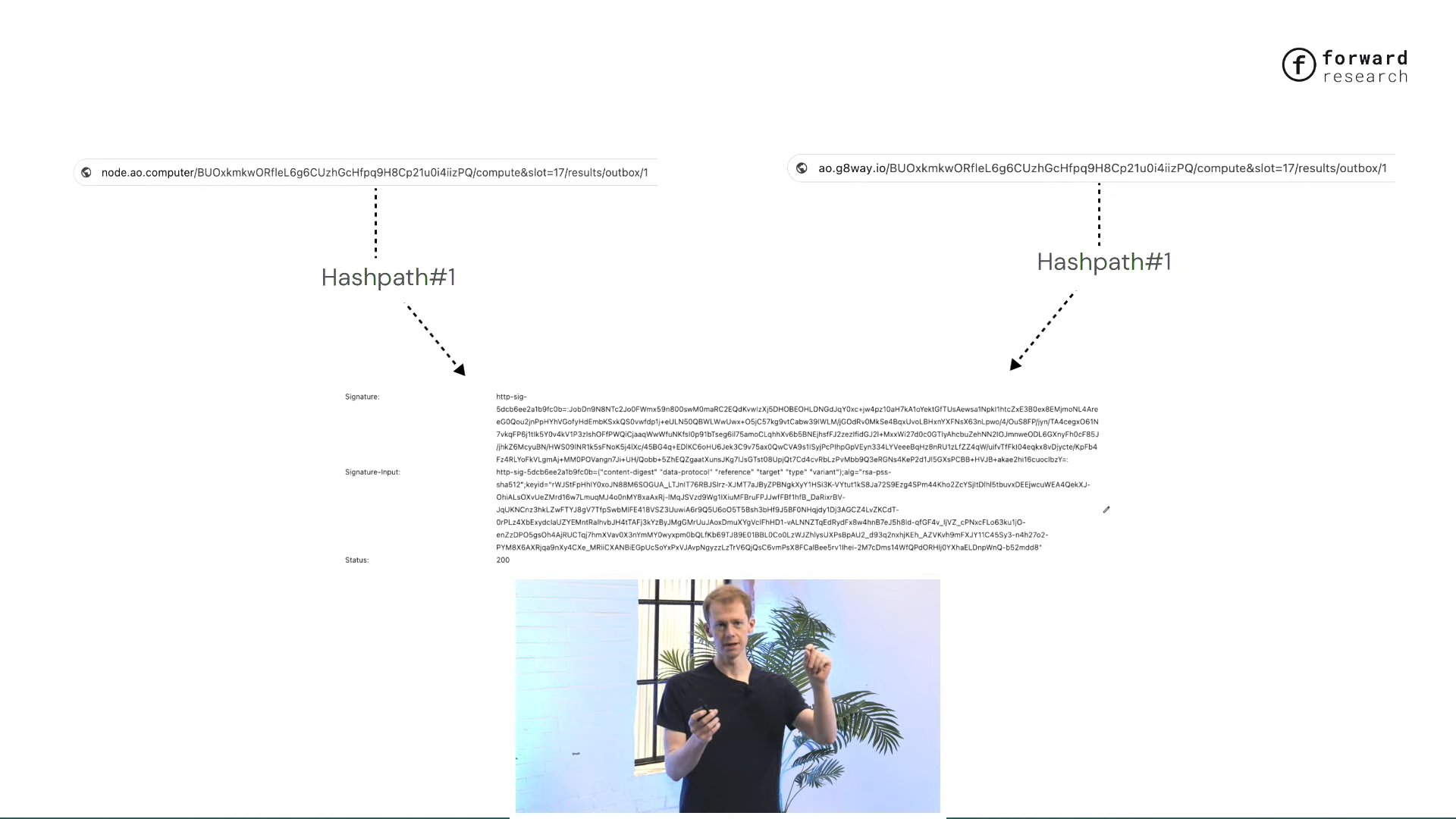

When you receive a response from an AO-Core node, for example in a browser, it now arrives as a signed message. Previously this last link was unverified unless you ran a blockchain node yourself, which almost no one does because it is too impractical. You can verify the signature, which shows that a node has attested to the correctness of a resource (which can be data, a service, or a representation of state). This is one of the two basic building blocks of AO-Core.
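To make the idea of a signed response concrete, here is a minimal sketch of how an RFC 9421 signature base is assembled and checked. It is a simplified subset of the standard, not HyperBeam's implementation: it uses the `hmac-sha256` algorithm with a shared demo key so that it runs on the Python standard library alone, whereas a real node would sign with an asymmetric key. The response values, the key, and the `demo-node` key ID are all made up for illustration.

```python
import base64
import hashlib
import hmac


def signature_params(covered, created, keyid):
    """Serialize the covered components and the signature parameters."""
    names = " ".join(f'"{name}"' for name in covered)
    return f'({names});created={created};keyid="{keyid}";alg="hmac-sha256"'


def signature_base(components, covered, params):
    """Build the string that gets signed: one line per covered component."""
    lines = [f'"{name}": {components[name]}' for name in covered]
    lines.append(f'"@signature-params": {params}')
    return "\n".join(lines)


def sign(components, covered, key, created, keyid):
    """Return the two headers a signer would attach to the message."""
    params = signature_params(covered, created, keyid)
    base = signature_base(components, covered, params)
    mac = hmac.new(key, base.encode("utf-8"), hashlib.sha256).digest()
    return {
        "Signature-Input": f"sig1={params}",
        "Signature": f"sig1=:{base64.b64encode(mac).decode('ascii')}:",
    }


def verify(components, covered, key, created, keyid, headers):
    """Recompute the signature over the received components and compare."""
    expected = sign(components, covered, key, created, keyid)["Signature"]
    return hmac.compare_digest(expected, headers["Signature"])


# Hypothetical response from a node (all values are illustrative assumptions).
body = b"<h1>balance: 42</h1>"
digest = base64.b64encode(hashlib.sha256(body).digest()).decode("ascii")
response = {
    "@status": "200",
    "content-type": "text/html",
    "content-digest": f"sha-256=:{digest}:",
}
covered = ["@status", "content-type", "content-digest"]
node_key = b"demo-shared-secret"  # assumption: symmetric demo key, not a node key

headers = sign(response, covered, node_key, 1700000000, "demo-node")
print(headers["Signature-Input"])
print(verify(response, covered, node_key, 1700000000, "demo-node", headers))
```

Because the body is covered through its digest, changing either the status or the content after signing makes verification fail. A full verifier would also parse the parameters out of `Signature-Input` instead of receiving them as arguments.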

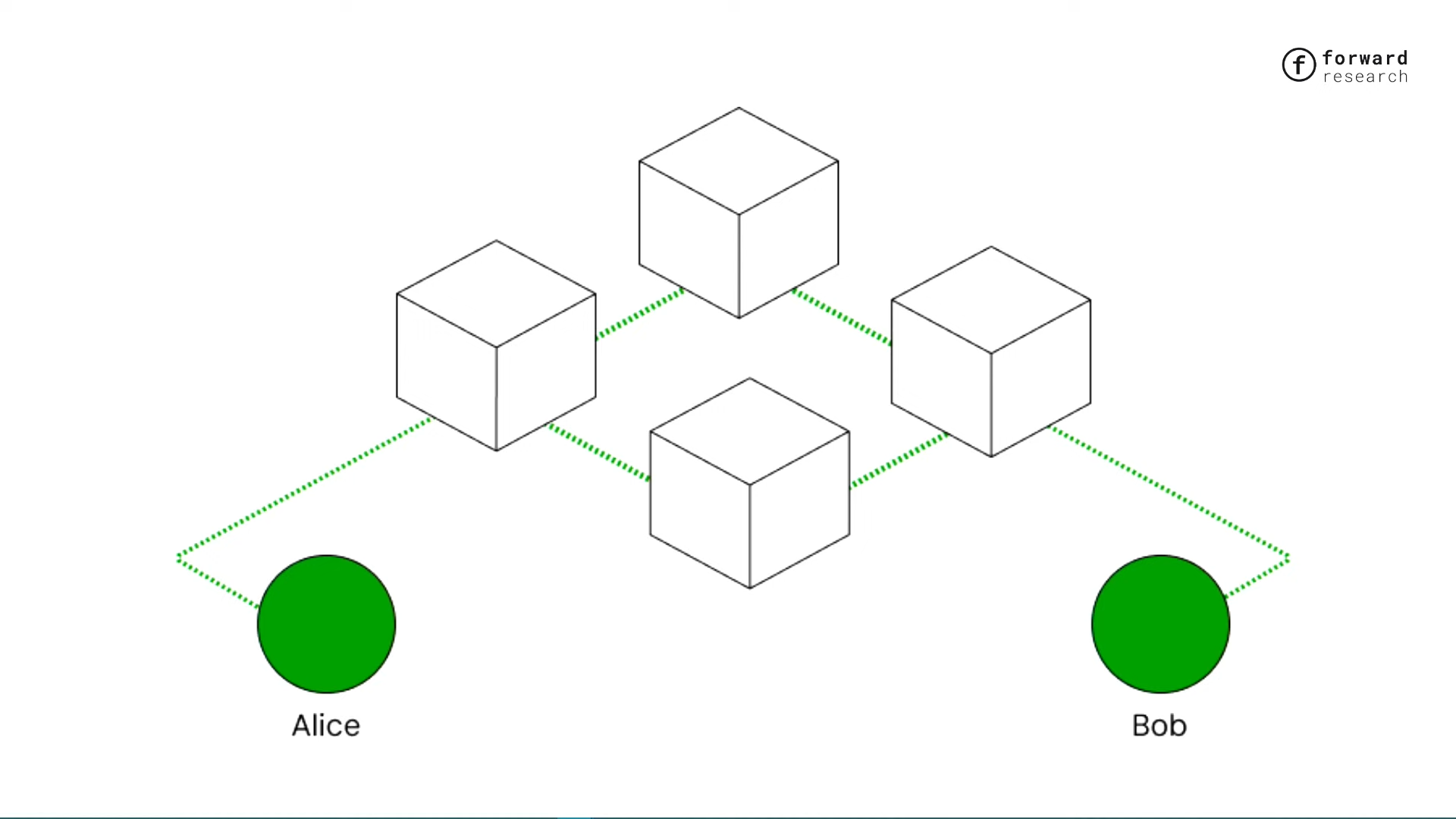

Now, not only are connections between nodes verified, but connections to edge users (such as Alice and Bob) can also be verified and are just as trustworthy as connections between nodes.

Hash Paths

Another building block of AO-Core is "Hash Paths". Messages on the Internet are usually chained: a bank notifies an exchange that a user has deposited funds, and the exchange notifies the user that the balance has been updated. These interactions are related: one message changes the state of another service, which generates a new message to pass on to the user. We can link these interactions the way a blockchain does: message 1 occurs, message 2 occurs based on the resulting state, and finally state 3 is reached. A hash path is simply a representation of a series of interactions: a sequence of transaction records applied to an initial state and program. If you are familiar with the AO ecosystem, you will know that its "Process" layer records the log of inputs to a process, and that combining this log with the program and initial state on Arweave lets you recompute the output.

AO-Core goes one step further: every message in the system, every state proof, and even messages between nodes, constitute a micro-blockchain. For example, a user sends a message to a node, the node gets the schedule of the process, gets a response and performs the calculation, and then returns the result to the user. Every message in the whole process builds a small blockchain. This brings some interesting features: for example, a user requests a bank to transfer money to an exchange and complete the transaction, affecting five people, and ultimately everyone's message contains the hash path that generated the output - like a Merkle root containing all the interaction history. This output goes directly to the browser, and users can verify it with existing network infrastructure.

A hash path is like a verifiable atomic unit that tells you “who said what and when” and, more importantly, “why”.
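The chaining idea can be sketched in a few lines. This is only an illustration under assumed rules: the hash function (SHA-256), the way a message ID is derived, and the way each step folds into the path are assumptions made for the example, not the algorithm AO-Core specifies.

```python
import hashlib


def message_id(message: bytes) -> bytes:
    """Assumed message identifier: the SHA-256 digest of the message bytes."""
    return hashlib.sha256(message).digest()


def extend_hashpath(hashpath: bytes, message: bytes) -> bytes:
    """Fold one more message into an existing path."""
    return hashlib.sha256(hashpath + message_id(message)).digest()


def hashpath_of(initial_state: bytes, messages) -> bytes:
    """Path for an initial state followed by an ordered list of messages."""
    path = message_id(initial_state)
    for message in messages:
        path = extend_hashpath(path, message)
    return path


deposit_then_trade = hashpath_of(b"initial state", [b"deposit 10", b"trade 5"])
trade_then_deposit = hashpath_of(b"initial state", [b"trade 5", b"deposit 10"])

print(deposit_then_trade.hex())
print(deposit_then_trade != trade_then_deposit)  # order changes the path
```

Each path commits to the whole history that produced it, so two parties who hold the same path are talking about the same sequence of interactions.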

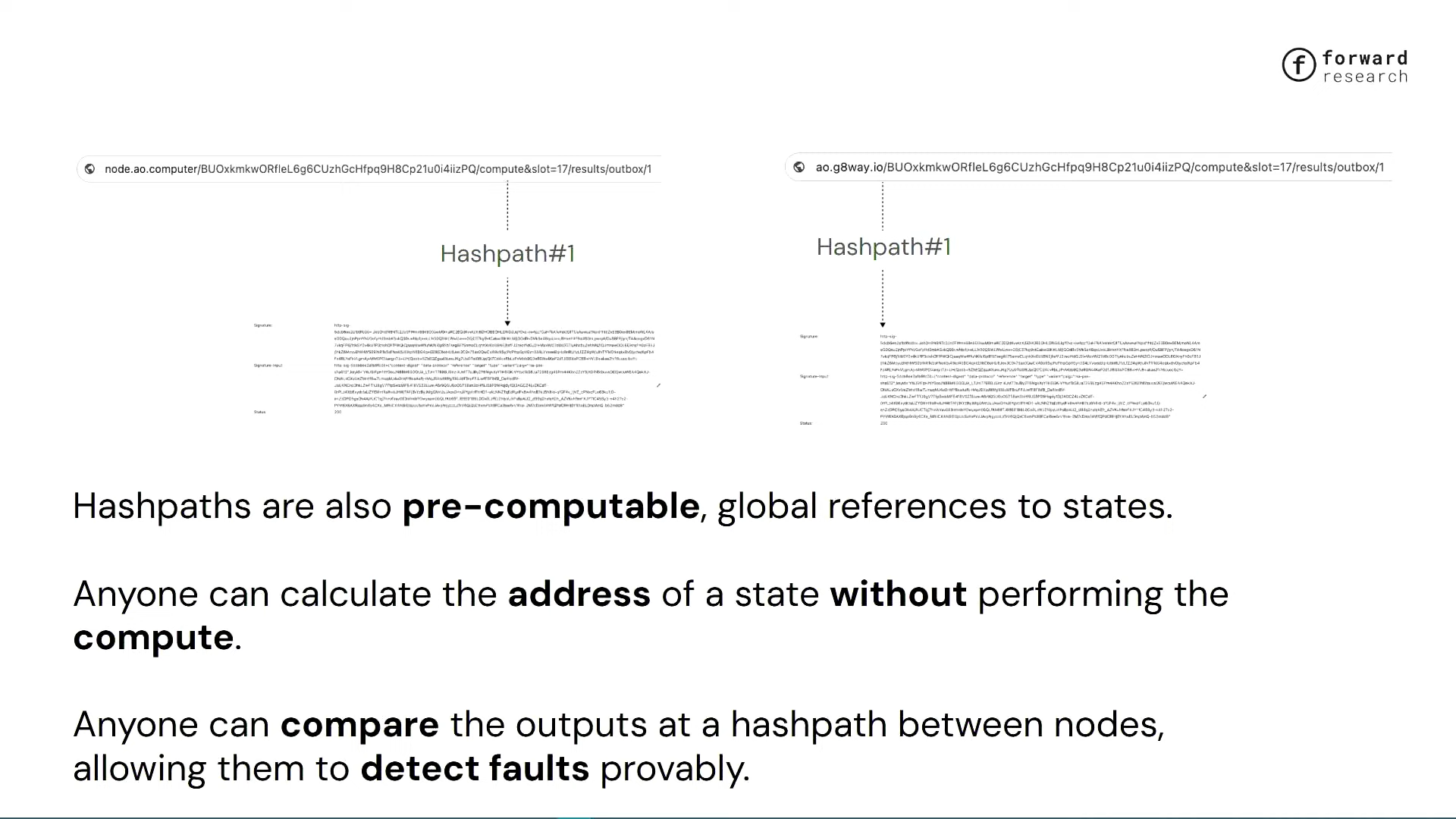

Another interesting property of hash paths is that they can be computed in advance. This solves a fundamental problem of hyper-parallel networks such as AO, where there is no forced consensus, the ordering is flexible, and users can choose the order they want.

You may face multiple different paths. In traditional blockchains, to know the state after a transaction, you have to recalculate the entire chain. But hash paths allow users to pre-specify "what is the result if A to B to C is applied", and you can calculate the state identifier just by knowing the message identifier. It's like a hyperlink to the calculated state, embedded with network primitives. You can use it to compare the outputs of different nodes on a specific hash path. If Alice, Bob, and Charlie say the state is X, and Dennis says it is Y, you can find the disagreement and solve the problem through the resolution system.
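As a rough sketch of this comparison, the snippet below derives a path identifier from message identifiers alone and then groups what several nodes report for that path. The chaining rule and the report format are assumptions made for the example. The function only surfaces the disagreement; deciding who is right is left to whatever resolution system is in use.

```python
import hashlib
from collections import Counter


def hashpath_from_ids(base_id: str, message_ids) -> str:
    """Derive a path identifier from hex message IDs, without running anything."""
    path = bytes.fromhex(base_id)
    for mid in message_ids:
        path = hashlib.sha256(path + bytes.fromhex(mid)).digest()
    return path.hex()


def find_disagreement(reports):
    """reports maps node name -> state ID it claims for one hash path.

    Returns the most frequently reported state and the nodes that differ.
    """
    most_reported, _ = Counter(reports.values()).most_common(1)[0]
    dissenters = sorted(n for n, s in reports.items() if s != most_reported)
    return most_reported, dissenters


base_id = hashlib.sha256(b"initial state").hexdigest()
ids = [hashlib.sha256(m).hexdigest() for m in (b"A", b"B", b"C")]
path = hashpath_from_ids(base_id, ids)

reports = {"alice": "X", "bob": "X", "charlie": "X", "dennis": "Y"}
print(path)
print(find_disagreement(reports))
```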

Hash paths can also combine state proofs. HTTP signed messages support stacked signatures, similar to multi-signatures. Originally this was designed for proxies or caches, but AO uses it to combine proofs from Alice, Bob, and Charlie. If Dennis disagrees, the disagreement can be seen and the failure can be resolved accordingly.
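A simplified sketch of stacking attestations follows. In RFC 9421 the `Signature` header is a dictionary that can carry several labeled signatures; here each signer is reduced to an HMAC-SHA256 key over a plain statement string, which is an assumption made to keep the example self-contained. The labels, keys, and statement format are all hypothetical.

```python
import base64
import hashlib
import hmac


def sign_statement(statement: str, key: bytes) -> str:
    """Base64 HMAC-SHA256 over the statement a party attests to."""
    mac = hmac.new(key, statement.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(mac).decode("ascii")


def stack_signatures(signed):
    """signed maps label -> (statement, key); returns one combined header value."""
    return ", ".join(
        f"{label}=:{sign_statement(statement, key)}:"
        for label, (statement, key) in signed.items()
    )


def check_signatures(header, statement, keys):
    """Return label -> True/False: does that party's signature cover `statement`?"""
    results = {}
    for member in header.split(", "):
        label, _, value = member.partition("=")
        signature = value.strip(":")
        key = keys.get(label)
        results[label] = key is not None and hmac.compare_digest(
            signature, sign_statement(statement, key)
        )
    return results


keys = {"alice": b"ka", "bob": b"kb", "charlie": b"kc", "dennis": b"kd"}
state_x = "hashpath=abc123;state=X"
state_y = "hashpath=abc123;state=Y"

header = stack_signatures({
    "alice": (state_x, keys["alice"]),
    "bob": (state_x, keys["bob"]),
    "charlie": (state_x, keys["charlie"]),
    "dennis": (state_y, keys["dennis"]),  # Dennis attests to a different state
})
result = check_signatures(header, state_x, keys)
print(result)
```

Checking the combined header against state X shows three matching attestations and one that does not match, which is exactly the visible disagreement described above.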

Another highlight is that the choice of signature algorithm is completely flexible. Signed HTTP messages follow the RFC 9421 standard. SHA-512-based signing is the default here, but other algorithms (such as ECDSA or RSA variants) are also supported, and signatures of different types can even be mixed on one message and still be verified, which is very rare in existing production environments.

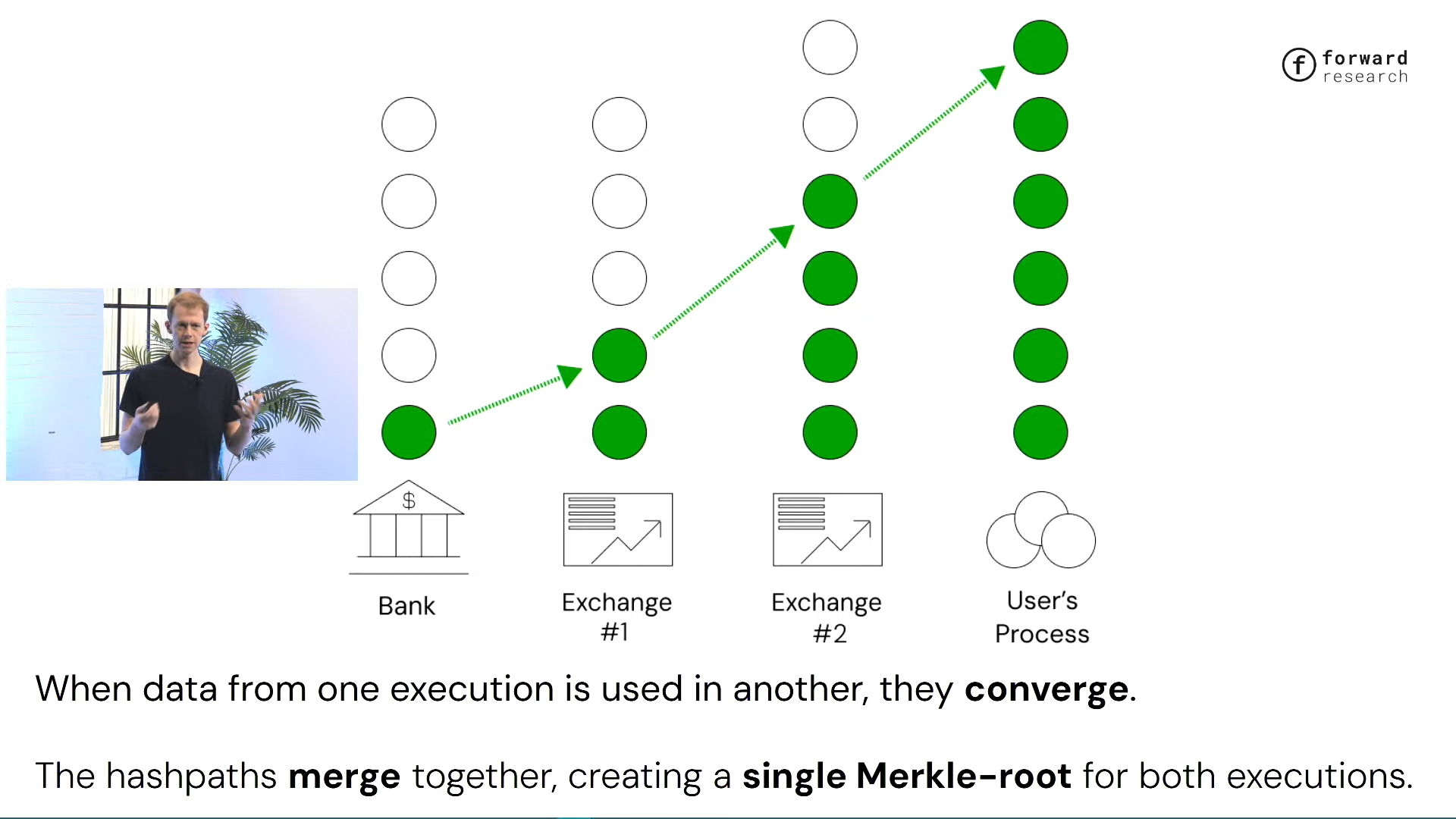

We don't fully understand the full significance of this yet, but there is one observation worth noting. Imagine a system with 4 participants, such as a bank (which may be a token process in AO), an exchange (perhaps a liquidity pool), and a user process. As hash paths are passed between these participants, the paths are gradually combined. If the user process generates a message for the 6th interaction and passes it downstream, you will get a Merkle root as a proof. This root can be used to verify the previous state of other processes. If expanded to the maximum scope, the interaction history of every process and service in the network can theoretically be condensed into a 256-bit Merkle root, and the interaction positions can be traced back.
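To show how many interaction histories can condense into one 256-bit value, here is a generic binary Merkle tree over a list of hash paths. The talk does not specify how AO-Core actually combines paths, so the construction below (SHA-256, duplicating the last node on odd levels) is an assumption chosen only for illustration.

```python
import hashlib


def merkle_root(leaves):
    """Return the 32-byte root of a simple binary Merkle tree over `leaves`."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = [hashlib.sha256(leaf).digest() for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level.append(level[-1])
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


# Hypothetical hash paths from a token process, a pool, and a user process.
paths = [b"token-process-path", b"pool-path", b"user-process-path"]
root = merkle_root(paths)
print(root.hex(), len(root) * 8)
```

Changing any single leaf changes the root, so one short value commits to every history that fed into it.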

This feature is quite interesting. It makes it possible to check for errors against a single network-wide root, or to build other functions on top. In addition, because it is based on RFC 9421, you can adjust the hash path algorithm so that it only accepts paths that carry proof of the underlying state transitions. For example, you can require zero-knowledge proofs or TEE (Trusted Execution Environment) attestations and include only TEE-verified results. The final Merkle root then contains state proofs for all messages, and you can verify them. Holding a zero-knowledge proof or a TEE node's signature can, for instance, confirm that the 6th output of process No. 5040 is a specific piece of data, because downstream nodes require a proof before they accept the path.

This mechanism has great potential. We expect to keep exploring its applications over the next 5 to 10 years, much like the far-reaching impact of Arweave's permanent storage primitive. At scale, it provides a unified Merkle root that covers almost all computation in the network.

Since consensus is not enforced, there is no single root in the system, but rather multiple roots generated at different points in time. However, proofs are naturally passed and constructed as systems interact, which is not only attractive but also comes at no additional cost.

This process happens spontaneously, similar to state verification or some kind of consensus, depending on the hashing algorithm. As a byproduct of distributed systems, it echoes the way the Internet works. This mechanism works naturally without adding transmission complexity. For example, Alice pays Bob a token to watch a video in a small transaction. When Bob settles, the token propagates with the hash path to the liquidity pool of his transaction, and then quickly expands to the rest of the network. This forms a fuzzy consensus, or at least the verifiability of state inputs, which gradually advances as the network is used, without additional communication overhead.

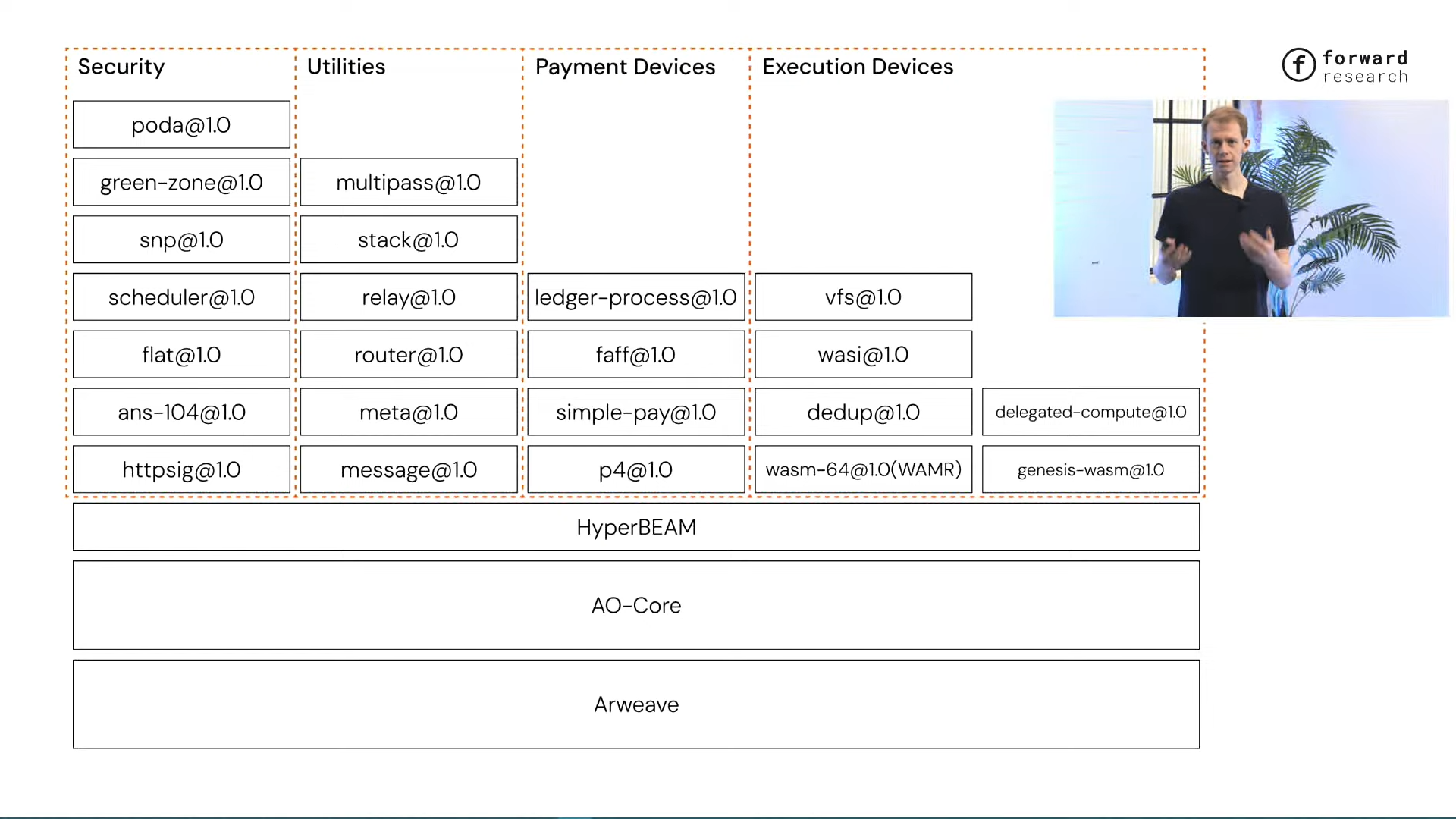

This feature is quite valuable. As a primitive, AO-Core does not enforce a single virtual machine or security mechanism. It only requires that proofs and hash paths be transmitted in accordance with RFC 9421, and does not otherwise restrict how they are used. Its architecture is built around "devices": small state transition rules, similar to the components of a blockchain but broken down into independent, composable and reusable units.

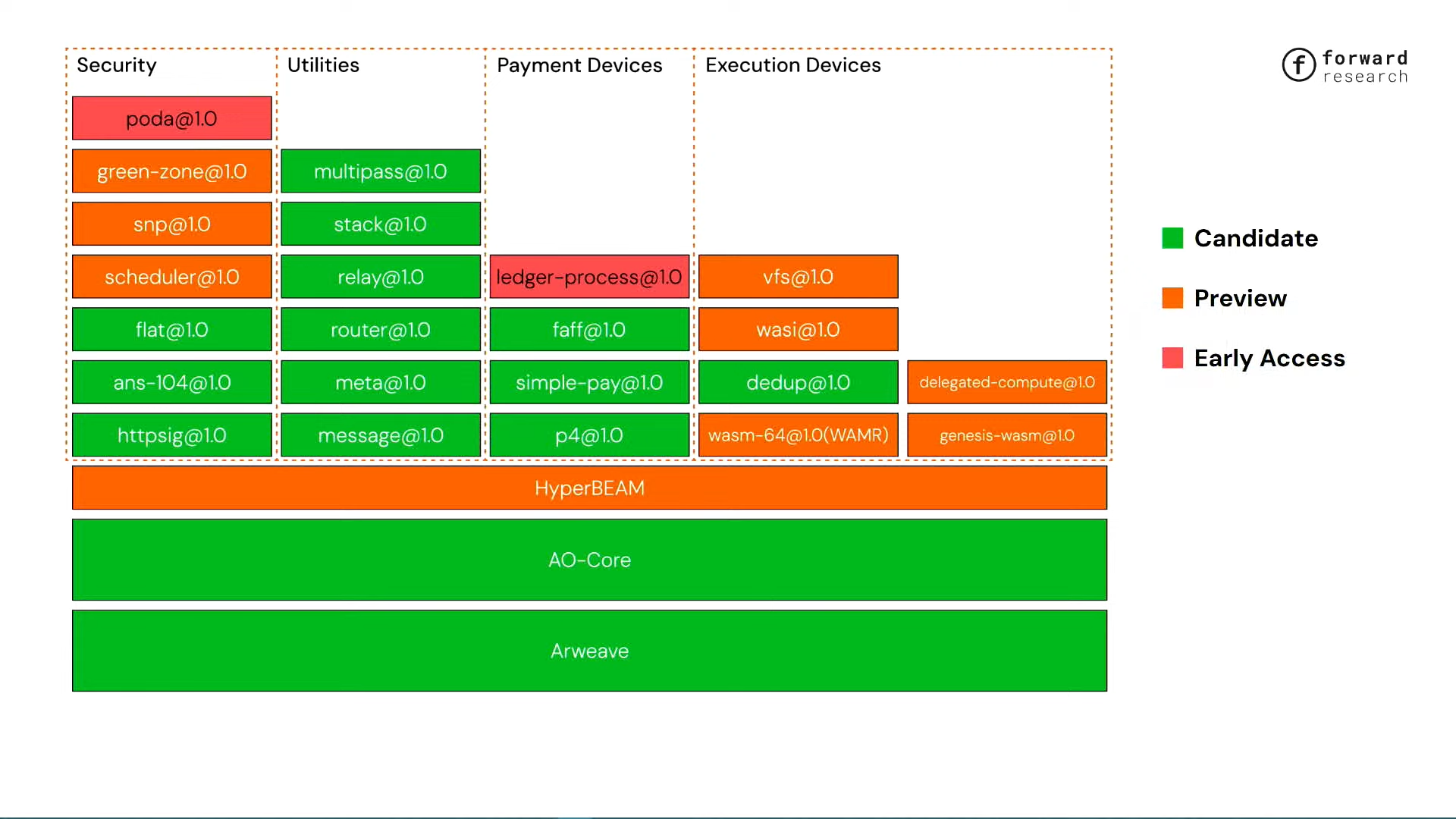

For example, an AO process is built on AO-Core. It requires at least 6 devices, and in practice uses somewhere between 10 and 20, which can be flexibly combined, for example by replacing the scheduler or the execution engine. Similar to the toolkit concept of Cosmos and Polkadot, AO-Core provides prefabricated modules that can be assembled on demand, and it comes with a decentralized network already attached. Today we are starting to onboard node operators. To start a new blockchain, you only need to send an HTTP message to the URL of a HyperBeam node. New features can be added by introducing a single device, and each node operator can choose whether or not to run it. This forms a supply-and-demand market between users and operators and drives the development of the device ecosystem.
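The device idea can be pictured as a stack of small state transition functions, any one of which can be swapped out. The sketch below is a conceptual model only: the device names (`scheduler`, `adder`, `multiplier`) and the dictionary-based state are hypothetical and do not correspond to actual HyperBeam devices or their interfaces.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
Message = Dict[str, int]
Device = Callable[[State, Message], State]


def scheduler(state: State, message: Message) -> State:
    """Assign the next slot number to the incoming message."""
    return {**state, "slot": state.get("slot", 0) + 1}


def adder(state: State, message: Message) -> State:
    """One possible execution engine: add the amount to the running value."""
    return {**state, "value": state.get("value", 0) + message["amount"]}


def multiplier(state: State, message: Message) -> State:
    """A drop-in replacement engine: multiply instead of add."""
    return {**state, "value": state.get("value", 1) * message["amount"]}


def run_stack(stack: List[Device], state: State, message: Message) -> State:
    """Apply each device in order to produce the next state."""
    for device in stack:
        state = device(state, message)
    return state


def run_all(stack: List[Device], messages: List[Message]) -> State:
    state: State = {}
    for message in messages:
        state = run_stack(stack, state, message)
    return state


messages = [{"amount": 2}, {"amount": 5}]
print(run_all([scheduler, adder], messages))
print(run_all([scheduler, multiplier], messages))
```

Swapping one function in the list changes the behavior of the whole "process" while the scheduler stays the same, which is the kind of reuse the device system is meant to allow.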

How to use AO-Core: HyperBeam

The AO testnet was launched 1 year ago, and prototyping started about 18 months ago. The original scheduling, messaging and computing units have now been turned into pluggable devices. In AO-Core mainnet, the process device coordinates about 20 downstream components. This is where HyperBeam, the implementation of AO-Core, comes from: it is a rebuild on top of the Erlang virtual machine, provides modular infrastructure, and supports the development of many kinds of devices.

AO runs on HyperBeam, where a stack of devices works together to provide a unified user experience: execution, payment and data conversion devices, plus security devices that provide proofs of hash paths and results.

HyperBeam can be thought of as the operating system for AO devices. It is an open platform: node operators choose which devices to run, and users assign tasks based on device type and pricing. Traditional blockchains have a global price; AO has no such requirement. Operators set their own prices through payment devices, and users can compare and choose. Each request is a blockchain transaction, and developers can have an AOS process output web pages that present dynamic information in real time.
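The market between users and operators can be sketched as a simple selection problem: among the operators that run the devices a task needs, pick the cheapest. Everything in this example is hypothetical, including the operator names, device names, and prices; how real operators advertise their devices and prices is not described here.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class Offer:
    operator: str
    devices: FrozenSet[str]
    price_per_request: float  # assumed unit: an arbitrary token amount


def cheapest_offer(offers: Iterable[Offer], required: Iterable[str]) -> Optional[Offer]:
    """Pick the lowest-priced operator that runs every required device."""
    needed = frozenset(required)
    eligible = [offer for offer in offers if needed <= offer.devices]
    return min(eligible, key=lambda offer: offer.price_per_request, default=None)


offers = [
    Offer("node-a", frozenset({"process", "wasm"}), 0.004),
    Offer("node-b", frozenset({"process", "wasm", "gpu"}), 0.010),
    Offer("node-c", frozenset({"process"}), 0.001),
]

print(cheapest_offer(offers, {"wasm"}))
print(cheapest_offer(offers, {"gpu"}))
print(cheapest_offer(offers, {"tee"}))
```

A real client would likely weigh more than price, for example which attestations a node provides, but the basic shape is the same: there is no global fee, only offers to compare.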

For example, I send a message through the AOS console that specifies the content type (application/html or text/html) and the data to render. When a browser visits the URL, it gets a page, along with a hash path and signature that attest to the accuracy of the data. This is one of several additional uses and can be seen as a side benefit of the system design.

These visible features are really byproducts of the larger system design, for example smart contracts that output APIs. Like a web page, a process can return prices or balances that a browser can access directly. Liquidity pool services such as Dexi or Permaswap, which traditionally needed compute-consuming "dry runs", can now output browsable resources directly from their processes.

A more fundamental feature is that the state and hash path of a device's output can be cached. HyperBeam nodes can sit behind a CDN that caches results and merges signatures, using mature web infrastructure to improve response times without developers having to perform dry runs. It can also provide serverless functions without lock-in, at a cost much lower than AWS.

AO-Core does not enforce consensus rules, which lets it offer services that need no consensus but are still verifiable in the way a blockchain is. If the devices are configured appropriately, the node does not need a scheduler to determine the order of operations and simply executes user requests against the current hash path. For example, the user specifies "run X on this path", and the node applies it and produces a new path Y. This supports running WebAssembly containers as a serverless function framework similar to AWS Lambda, one that outputs results together with proofs and balances flexibility with reliability.

Thanks to these security mechanisms, users can confirm that results are accurate. The service runs on a decentralized network, so there is no need to trust a single provider; hash paths are portable, and there is no lock-in.

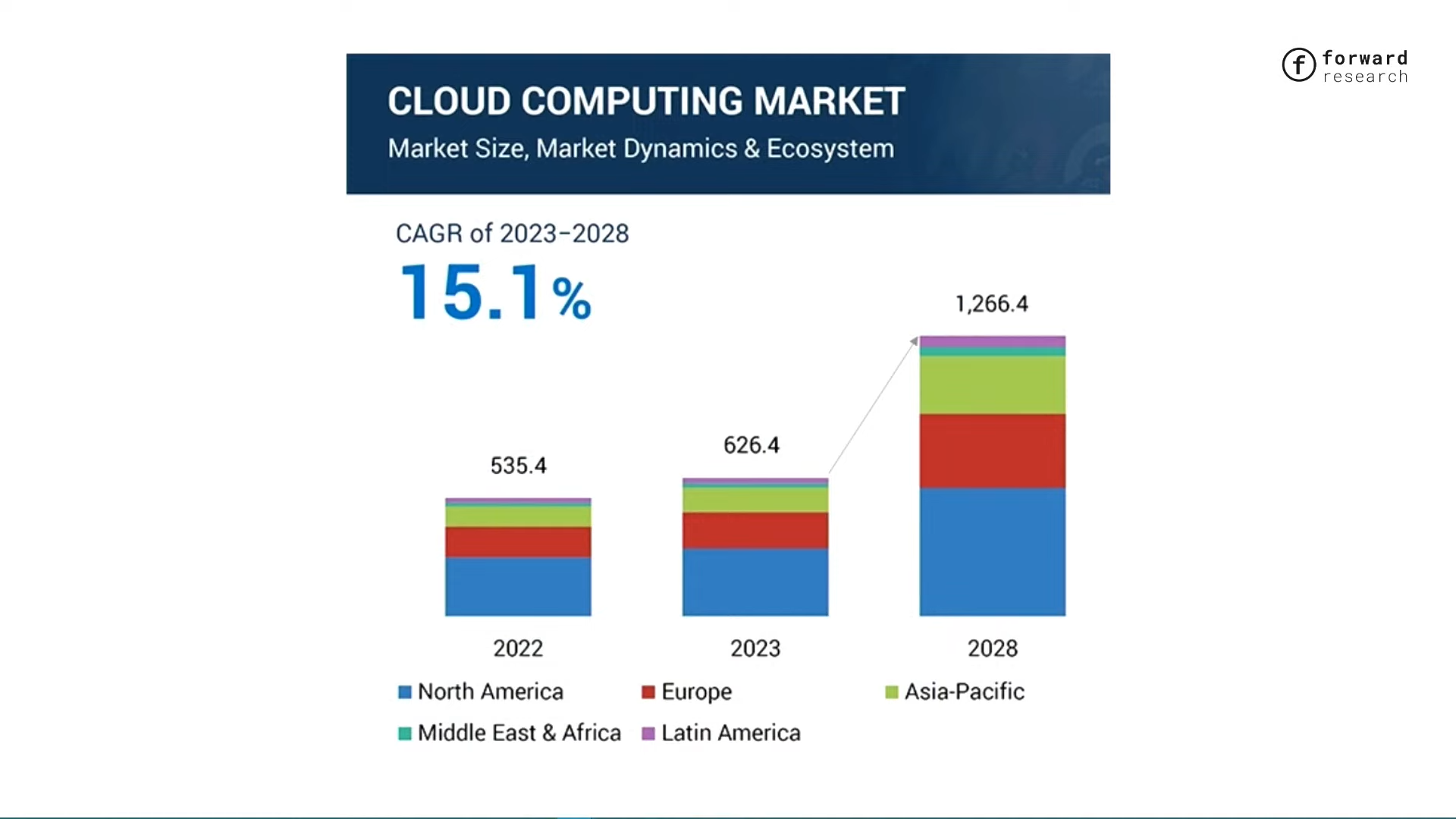

The significance of this capability can be measured by the size of the cloud computing market, which has revenues in the hundreds of billions of dollars per year, highlighting its potential impact.

We can now provide security that is comparable to or even better than AWS, connecting hardware resource providers and demanders. Although these providers do not have the brand trust of Amazon, they can achieve low-cost services through AO-Core. Although the specific price is unknown, it is reasonable to speculate that it will be significantly lower than the current level, which is a major highlight of the system.

Another point is that it simplifies building DePIN networks. You only need to add your own state transition components and can reuse the existing stack, which significantly lowers the barrier to getting started. This is exciting because DePIN is essentially an arbitrage on trust minimization: it offers low-cost services with a level of trust comparable to Amazon's.

This feature has great potential. HyperBeam can be regarded as a DePIN operating system. AO processes are only one of its applications; it supports many scenarios, such as storage and computing. Node operators choose devices as if from an app store, and a new network is connected to the network of hardware providers from day one. Some people have already started trying it.

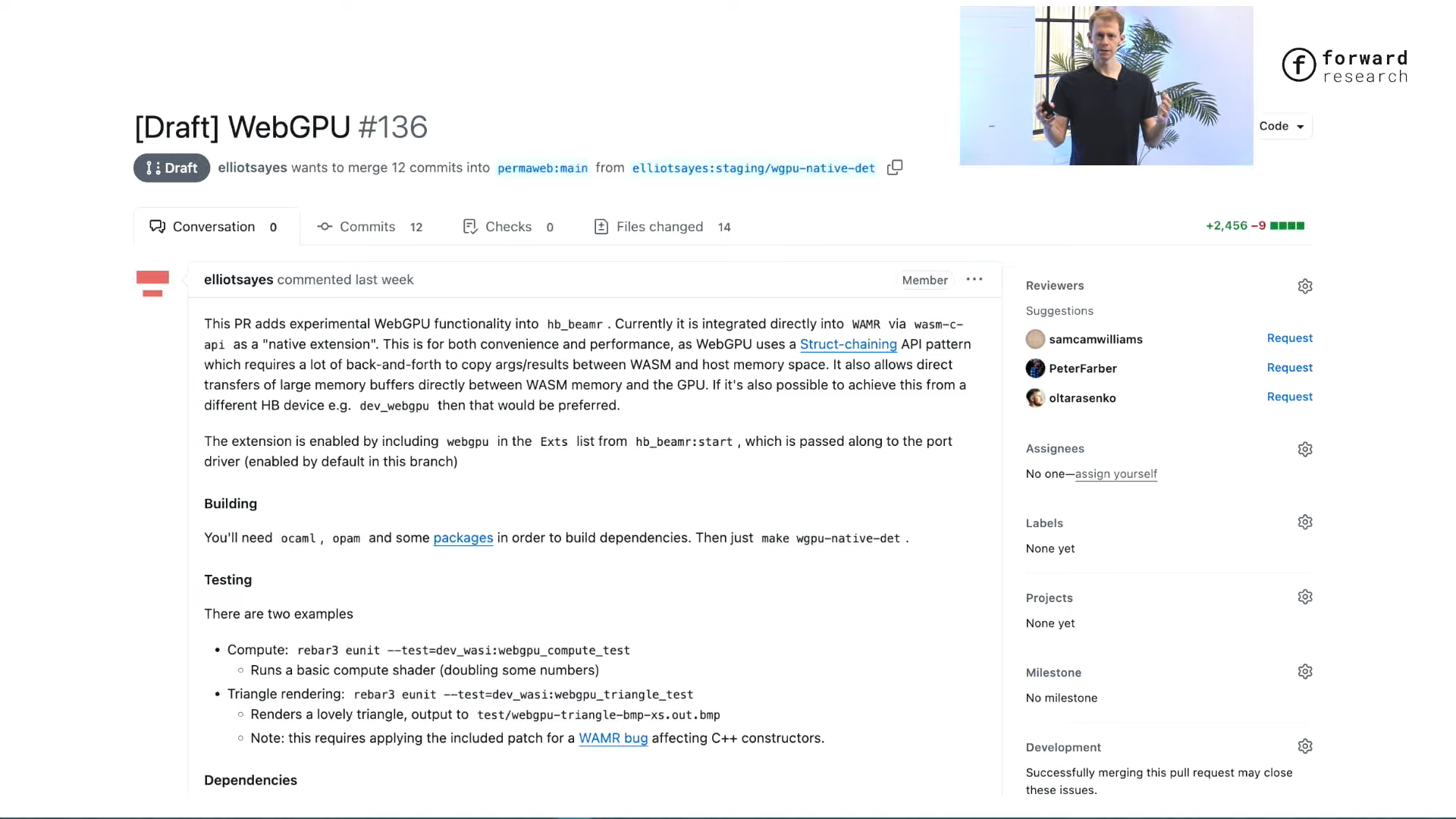

When we introduced HyperBeam a few months ago, some ecosystem members had already started building on it. For example, one pull request added WebGPU support, which makes GPUs available for HyperBeam execution, with nodes downloading code directly from Arweave. This effectively makes the URL bar Turing complete, and components can be reused by name, which shows how flexible the system is.

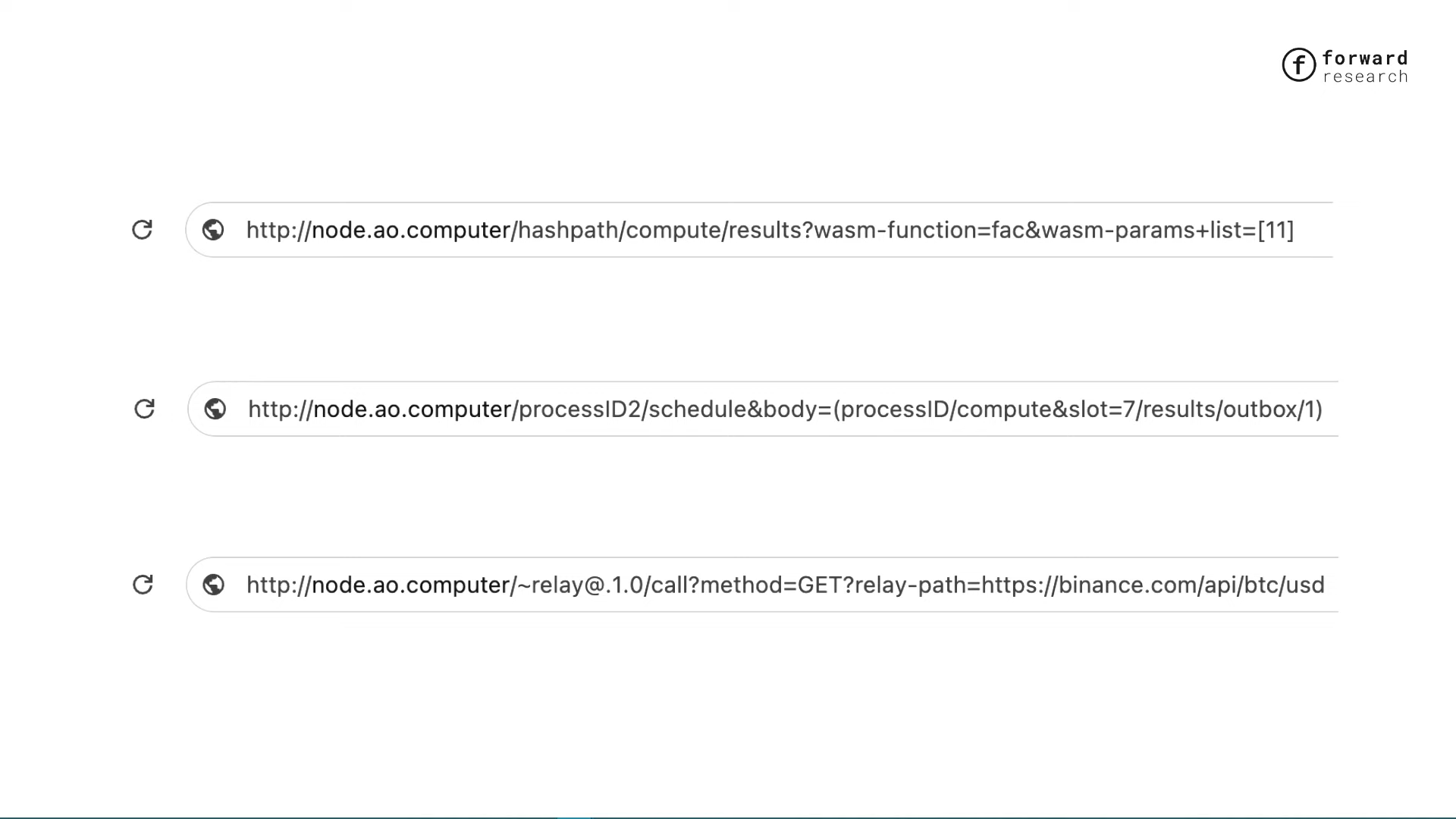

For example, by calling "compute" in the hash path to run a WASM factorial function (with parameter 1 or 11), the URL bar becomes a general-purpose computing tool. A scripting language can compose URLs, for example to work with the output of a scheduled process, which again highlights the flexibility of the system.

Because HTTP signed messages build on existing technology, they are available in many programming environments (Rust, Go, or C#). They are compatible from day 1 and remove the need to maintain separate libraries. Data is bundled and uploaded to Arweave and can be used out of the box.

How do I start running a HyperBeam node?

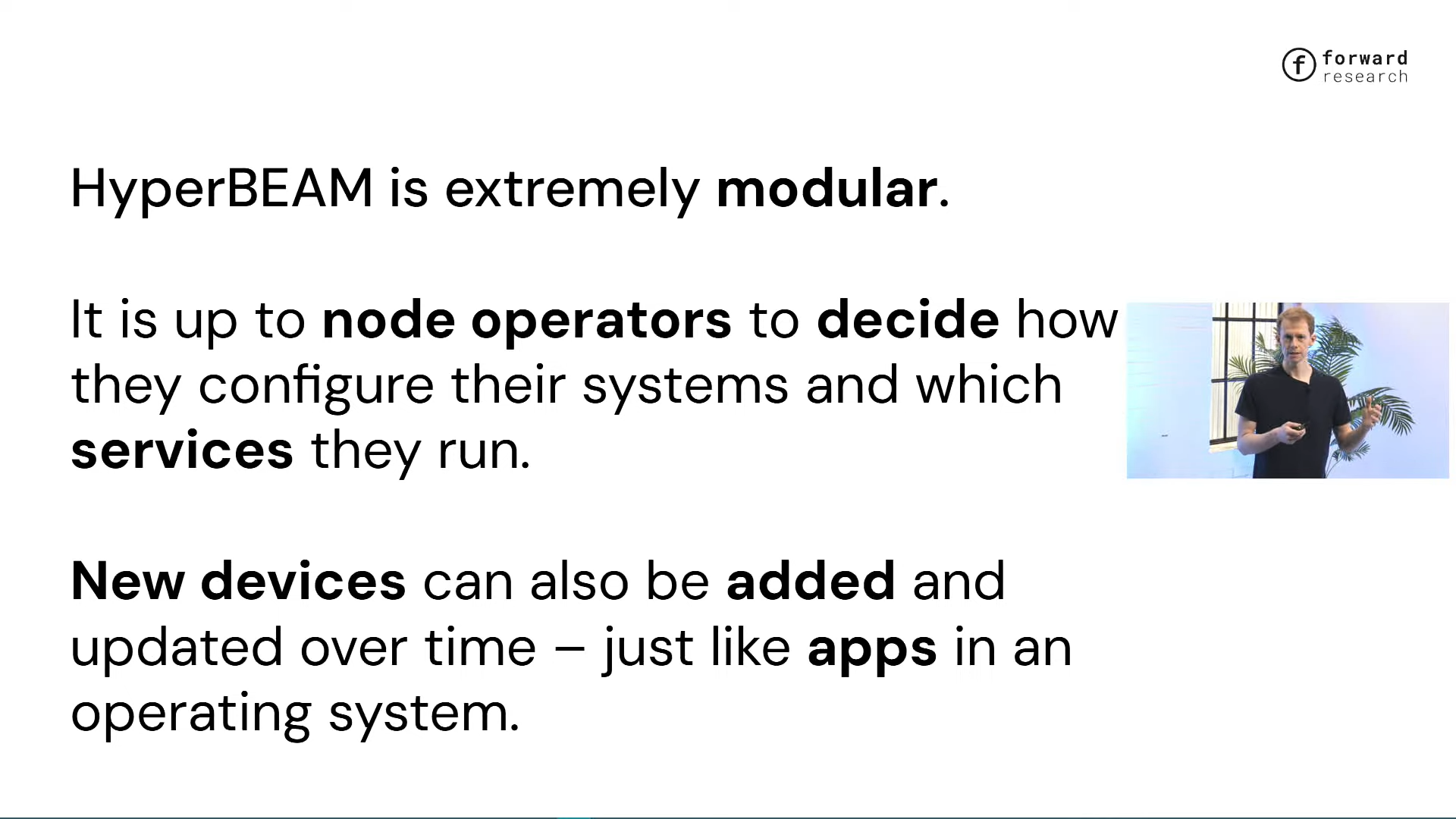

Now that you understand what makes the system unique, you can better understand the significance of running a HyperBeam node. Its modular design lets operators decide which services to offer and how to price them, and installing a device is much like installing a traditional application.

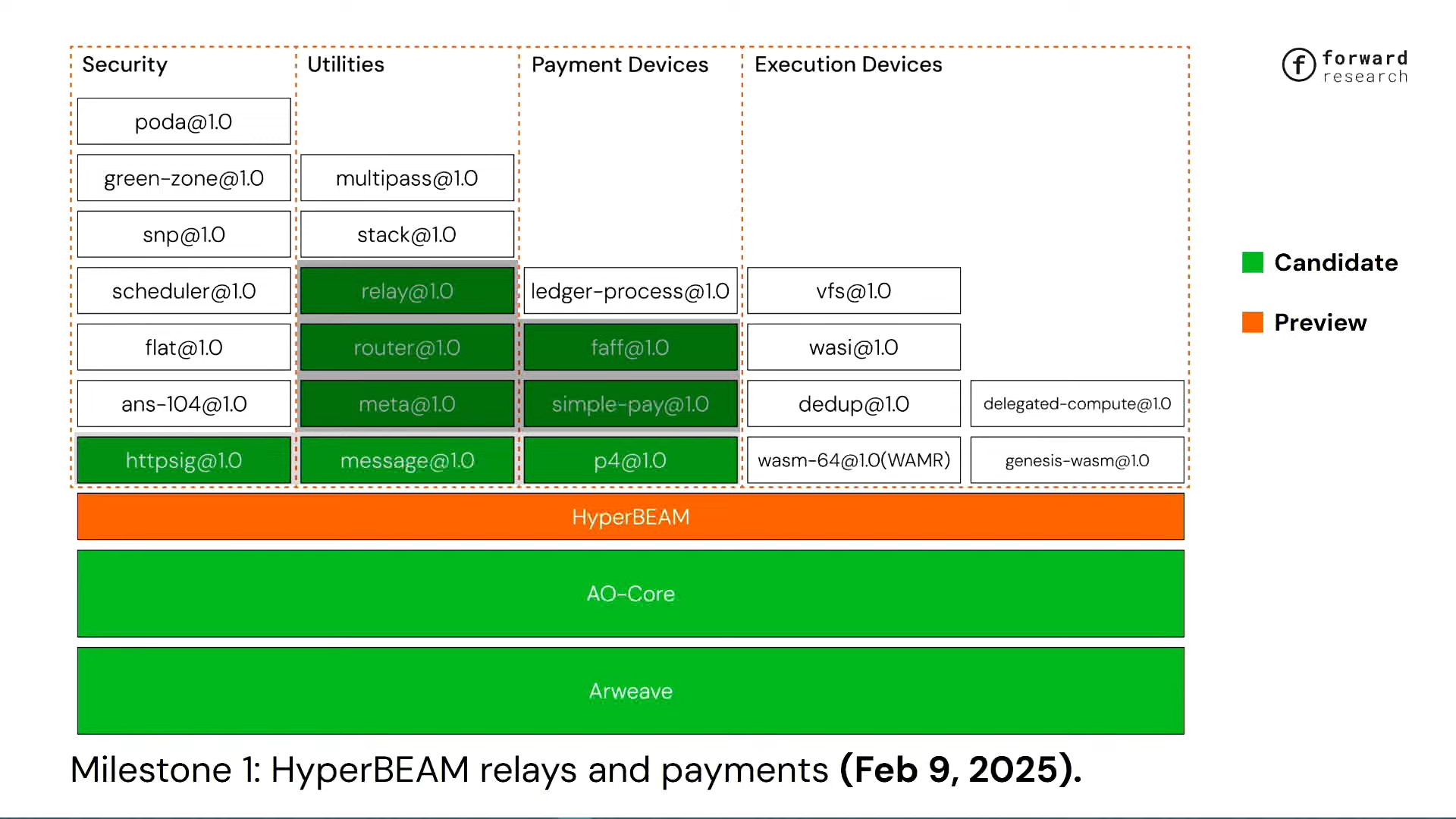

Currently, each device can be plugged in, removed and upgraded independently, just like apps from the app store of a normal operating system. We will release and update them regularly. Within Forward Research, we divide them into three maturity levels: early access, preview, and candidate releases. Candidate releases are stable, receive only minor updates, and follow the "Bitcoinization" principle to ensure the robustness of the AO-Core protocol, as with Arweave.

Today we released a preview version of HyperBeam (Beta), which you can run yourself. AO-Core itself is stable; the release includes candidate devices (marked green) as well as preview and early access devices to try out.

The AO ecosystem began with the launch of the testnet a year ago. Excitingly, users quickly began to conduct valuable transactions on it. Adoption and growth have been extremely fast, and the momentum has not diminished to this day. The result is an active network that many users rely on and that cannot simply be abandoned, unlike regular testnets, which are reset frequently. AO uses holographic state to represent the operation of smart contracts: even if all nodes are shut down, users can still run a node themselves and restore the state. This provides the basis for a smooth transition to mainnet.

This kind of transition is difficult to achieve in a full-consensus system because mainnet and testnet consensus are incompatible; this is true of both Arweave and Ethereum. The AO ecosystem does not have this limitation. Users currently rely on the "legacy net", which runs only the old node implementation. We can swap out the underlying mechanism live, introduce HyperBeam nodes, and keep the old network running at the same time, sharing data and rules so that the two connect smoothly.

On February 9, we released a payment relay device that provides a priority channel, and users can try mainnet mode in AOS (the AO operating system). It uses payment devices to interact with the old network and improve its capacity for high-value traffic.

The old network was plagued by problems caused by free computation. Free compute was initially used to attract users, but users now need a way to pay so that their transactions are prioritized and state is returned quickly. The HyperBeam payment mechanism was created for this purpose.

The Milestone 2 preview, released today, supports computation on HyperBeam, including scheduling and message pushing. It coordinates the operation of multiple processes and provides attestations from a TEE (Trusted Execution Environment), all built on AO-Core through HTTP signed messages.

This version is compatible with the old network and is available from today. You can check it on GitHub. We look forward to your exploration. (HyperBEAM code base: https://github.com/permaweb/HyperBEAM)

Future directions

Our next phase will focus on full compatibility with the old network. The key is to connect the scheduler unit to HyperBeam, obtain state along with verifiable proofs, and make progress public and trackable. At the same time, we will further improve the maturity and stability of the devices. Soon, we plan to deliver TEE (Trusted Execution Environment) verifiability and private computing, whose wide range of applications will open up more possibilities for the system.

In the short term, we will also focus on polishing the WASM execution environment. HyperBeam will fully support near-native execution speed through Ahead-of-Time (AOT) compilation, combined with private computing and verifiable output, and node operators will be the first to benefit. WebGPU and WASM NN devices are already in use, supporting rendering and LLM (large language model) workloads; they extend what AO-Core can do, and GPU access increases the load a node can carry.

While this technique is easy to understand for an audience familiar with the basics, it can be challenging to explain to a non-technical audience. Thanks for sticking around.